MISO JOA

Midcontinent Independent System Operator Joint Operating Agreement Microservices

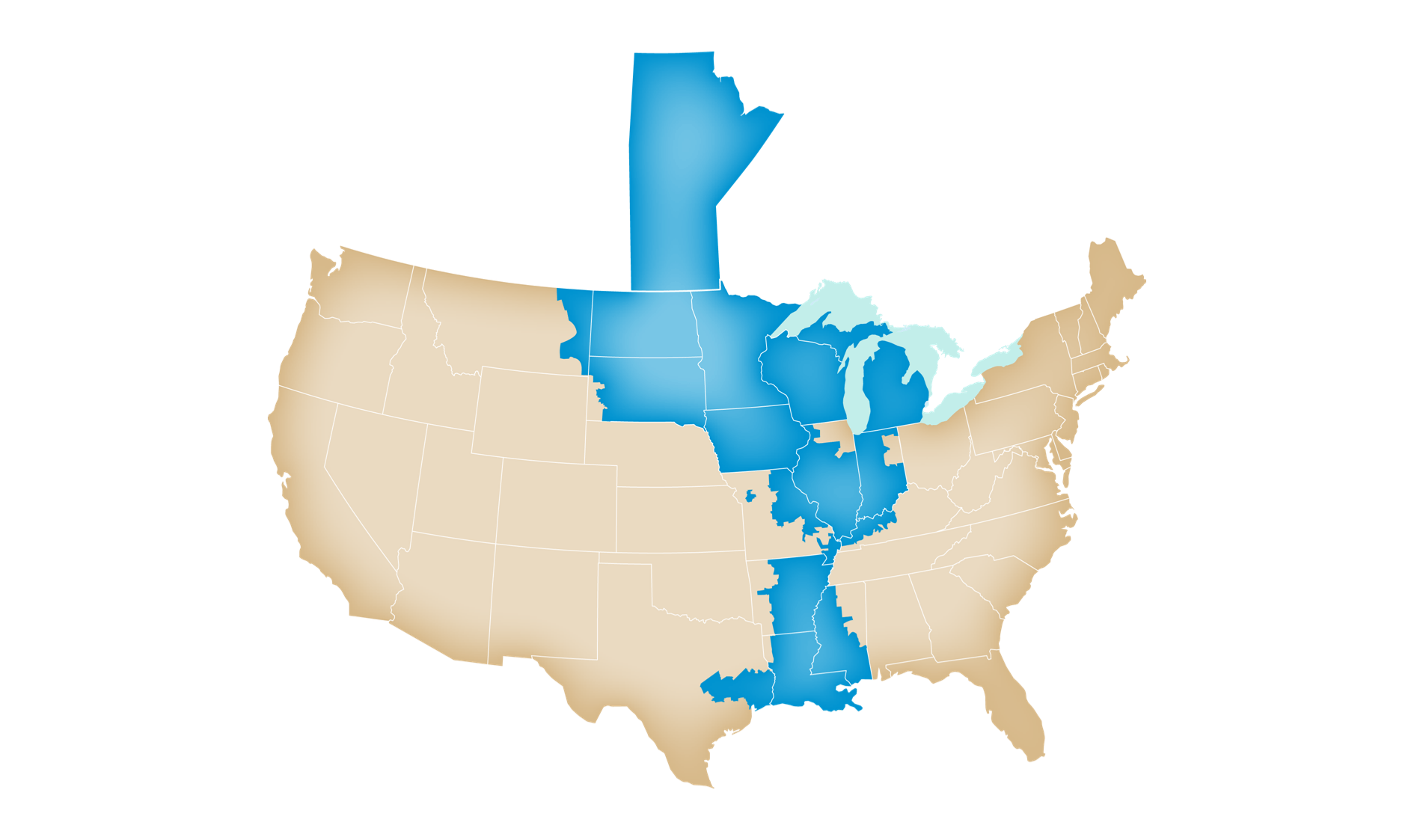

The MISO JOA system was a collection of data exchanges that shared energy and transformer information for locations across the US Midwest. These exchanges ran between US energy distributors (MISO partners) and MISO itself.

This project has passed through multiple teams and iterations. Previous phases investigated a total rewrite, but because the existing codebase was more than 10 years old and its documentation was outdated, there wasn’t enough context or confidence that a rewrite could be completed without introducing errors. In our phase, we opted to migrate smaller modules into microservices instead.

At a high level, our tasks were as follows:

- Outline the modules we could decouple, and document their interfaces.

- Define a Spring Boot microservice template, and migrate each module into a new microservice.

- Containerize the deployment to make it easier to scale with Kubernetes.

- Define a deployment schedule and process for modules to reduce the risk of data corruption.

- Establish an alerting and logging process for the new deployment, and onboard teams to it.

JBoss Interface <-> Module Outline

For the module outline, we noticed that the JBoss application already had sections of code that communicated via Java Message Service (JMS) queues, so I generated a CSV containing the following information:

JMS Queue subscribed <-> Section <-> published to JMS Queue

I created a diagram from this information, which resulted in 15 modules and 25 queues. Much of the system ran on CRON job triggers that inserted messages into the JMS queues and topics, and those messages in turn triggered data exchanges between MISO and its partners.
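Turning the CSV into diagram edges can be sketched in plain Java. This is a minimal sketch, assuming each row has the hypothetical shape `subscribedQueue,section,publishedQueue` with no header and no quoted commas (an empty column meaning "none", e.g. a CRON-triggered section). It links every section that publishes to a queue with every section subscribed to that queue; `QueueGraph` is an illustrative name, not part of the original tooling.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;

/** Hypothetical helper: builds producer -> consumer edges from "subscribed,section,published" rows. */
final class QueueGraph {
    // queue name -> sections that consume from it
    private final Map<String, Set<String>> subscribers = new TreeMap<>();
    // section -> queues it publishes to
    private final Map<String, Set<String>> publishes = new TreeMap<>();

    void addRow(String csvLine) {
        // -1 keeps trailing empty columns, so "queue,Section," still has 3 fields.
        String[] cols = csvLine.split(",", -1);
        if (cols.length != 3) {
            throw new IllegalArgumentException("expected 3 columns: " + csvLine);
        }
        String subscribed = cols[0].trim();
        String section = cols[1].trim();
        String published = cols[2].trim();
        if (!subscribed.isEmpty()) {
            subscribers.computeIfAbsent(subscribed, q -> new TreeSet<>()).add(section);
        }
        publishes.computeIfAbsent(section, s -> new TreeSet<>());
        if (!published.isEmpty()) {
            publishes.get(section).add(published);
        }
    }

    /** Returns edges formatted as "producer -[queue]-> consumer", sorted by producer then queue. */
    List<String> edges() {
        List<String> result = new ArrayList<>();
        for (Map.Entry<String, Set<String>> entry : publishes.entrySet()) {
            for (String queue : entry.getValue()) {
                for (String consumer : subscribers.getOrDefault(queue, Set.of())) {
                    result.add(entry.getKey() + " -[" + queue + "]-> " + consumer);
                }
            }
        }
        return result;
    }
}
```

The resulting edge list can be fed to any diagramming tool; sections joined by edges are candidates for the same module.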

Aside from the JMS queues, we found only a couple of REST and SOAP endpoints (used to communicate with external MISO partners), and we documented those in the outline as well.

Spring Boot Microservice

The client required Spring Boot 2.x with Java 11 (and Java 17 where possible), since their developer pool consisted of Java developers.

We defined a service template for all microservices to use. For each module, we copied the JBoss code (Java 7), along with its existing git history, into the new codebase, applied the service template, fixed compilation issues, and updated the unit tests to properly use dependency injection.
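Updating tests to use dependency injection mostly means replacing collaborators a class constructs internally with ones passed in through its constructor. The sketch below is illustrative only: `ExchangeRepository`, `ExchangeService`, and `InMemoryExchangeRepository` are hypothetical names, not classes from the MISO codebase. Spring can wire the real repository bean, while a unit test passes an in-memory fake.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical data-access abstraction; in Spring this would be backed by a repository bean. */
interface ExchangeRepository {
    void save(String record);
}

/** Hypothetical service: the repository is injected rather than created with "new" inside the class. */
final class ExchangeService {
    private final ExchangeRepository repository;

    ExchangeService(ExchangeRepository repository) {
        this.repository = repository;
    }

    /** Normalizes a record, persists it, and returns the stored value. */
    String process(String rawRecord) {
        String normalized = rawRecord.trim().toUpperCase();
        repository.save(normalized);
        return normalized;
    }
}

/** In-memory fake used by unit tests in place of a database-backed repository. */
final class InMemoryExchangeRepository implements ExchangeRepository {
    final List<String> saved = new ArrayList<>();

    @Override
    public void save(String record) {
        saved.add(record);
    }
}
```

Because the service has a single constructor, Spring (4.3 and later) injects the dependency without an `@Autowired` annotation, and the unit test needs no Spring context at all.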

For service-to-service communication, I mapped each JMS queue to a Kafka topic. Because Kafka is publish/subscribe while JMS queues are point-to-point, we set the expectation that only instances of a single microservice may subscribe to a given topic, so that, as with a queue, each message is consumed by one service.
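The one-service-per-topic rule is easy to check mechanically. A minimal sketch, assuming a hypothetical map from each microservice to the Kafka topics it consumes (for example, collected from each service's configuration): it reports every topic consumed by more than one service, since Kafka would deliver each message to all of those services rather than to a single consumer as a JMS queue would. It also assumes instances of the same service share one consumer group, so Kafka hands each message to only one of those instances.

```java
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;

/** Hypothetical check that each Kafka topic has at most one consuming microservice. */
final class TopicOwnership {
    private TopicOwnership() {}

    /**
     * @param subscriptions service name -> topics that service consumes
     * @return topic -> subscribing services, only for topics with more than one service
     */
    static Map<String, Set<String>> violations(Map<String, List<String>> subscriptions) {
        Map<String, Set<String>> consumersByTopic = new TreeMap<>();
        subscriptions.forEach((service, topics) -> {
            for (String topic : topics) {
                consumersByTopic.computeIfAbsent(topic, t -> new TreeSet<>()).add(service);
            }
        });
        // Keep only topics that break the point-to-point expectation.
        consumersByTopic.values().removeIf(services -> services.size() < 2);
        return consumersByTopic;
    }
}
```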

Containerized Deployments

Kubernetes and Docker were the technologies MISO had approved, and expected our teams to use, for cluster management and containerizing services.

We made sure an internal image repository was set up, and helped set up Black Duck and SonarQube so that artifacts were audited. Images were built as part of CI and deployed once the unit and integration tests passed.

Internal/external requests were firewalled, and communication with the Kafka cluster was authenticated via certificates.
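Certificate authentication to Kafka is mostly client configuration. The sketch below only assembles a `java.util.Properties` object using standard Kafka client configuration keys (`security.protocol`, `ssl.keystore.location`, and so on), so it compiles without the Kafka library. The class name, file paths, passwords, and broker address are hypothetical placeholders, not MISO's actual setup. In a real service these properties would be passed to a `KafkaProducer` or `KafkaConsumer`, or expressed under `spring.kafka.*` in Spring Boot.

```java
import java.util.Properties;

/** Hypothetical helper: Kafka client settings for certificate (mutual TLS) authentication. */
final class KafkaTlsConfig {
    private KafkaTlsConfig() {}

    static Properties clientProperties(String bootstrapServers,
                                       String keystorePath, String keystorePassword,
                                       String truststorePath, String truststorePassword) {
        Properties props = new Properties();
        props.put("bootstrap.servers", bootstrapServers);
        // Plain TLS, no SASL: with ssl.client.auth=required on the broker,
        // the client's certificate is what authenticates it.
        props.put("security.protocol", "SSL");
        // Keystore: this service's certificate and private key.
        props.put("ssl.keystore.type", "PKCS12");
        props.put("ssl.keystore.location", keystorePath);
        props.put("ssl.keystore.password", keystorePassword);
        // Truststore: the CA certificate(s) used to verify the brokers.
        props.put("ssl.truststore.type", "PKCS12");
        props.put("ssl.truststore.location", truststorePath);
        props.put("ssl.truststore.password", truststorePassword);
        // Check that the broker's certificate matches its hostname (Kafka's default since 2.0).
        props.put("ssl.endpoint.identification.algorithm", "https");
        return props;
    }
}
```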

Microservice Deployment

Aiming for a seamless deployment, I proposed three options:

- deploy one service at a time, adding code to both the legacy and modern deployments to serve as a mediator for communication while they run simultaneously

- deploy multiple services in groups, where no group depends on a microservice in another group. Example: Group Alpha (Services A, B, C) never communicates with Group Beta (Service D)

- Big-bang deployment: deploy everything at once, and turn off legacy once the modern deployment is healthy

A few constraints on the deployment could not be changed:

- the database must be shared by all microservices

- the legacy job scheduler and the new scheduler must run simultaneously

Option one minimized the impact of each deployment, but the mediator required introducing significant changes into the legacy codebase that could break it (the existing codebase ran on Java 7). Because of that older Java version, it was difficult to find actively maintained libraries that could communicate securely over Kafka, REST, and SOAP.

In option two, we would deploy a new service group and, once it was healthy, turn off the corresponding module group in the legacy codebase. This let us deploy code with minimal impact on legacy, and if issues arose, it was much easier to turn the legacy modules back on and reroute the requests. The testing surface of each deployment is larger than in option one, so to minimize it we kept groups to no more than three microservices.
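Finding the groups for option two amounts to computing connected components of the service communication graph: two services that talk to each other, directly or through another service, must land in the same group. A minimal sketch, assuming a hypothetical list of communication links between service names, uses union-find to build the groups and reports any group that exceeds the three-service limit. `DeploymentGroups` is an illustrative name.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.TreeSet;

/** Hypothetical planner: groups services so that no group talks to another group. */
final class DeploymentGroups {
    private final Map<String, String> parent = new HashMap<>();

    private String find(String s) {
        parent.putIfAbsent(s, s);
        String root = s;
        while (!parent.get(root).equals(root)) {
            root = parent.get(root);
        }
        // Path compression: point every node on the path directly at the root.
        while (!s.equals(root)) {
            String next = parent.get(s);
            parent.put(s, root);
            s = next;
        }
        return root;
    }

    void addService(String s) {
        find(s);
    }

    /** Records that two services communicate (in either direction). */
    void addLink(String a, String b) {
        String ra = find(a);
        String rb = find(b);
        if (!ra.equals(rb)) {
            parent.put(ra, rb);
        }
    }

    /** Returns groups as sorted sets, ordered by their first service name. */
    List<TreeSet<String>> groups() {
        Map<String, TreeSet<String>> byRoot = new HashMap<>();
        for (String s : new ArrayList<>(parent.keySet())) {
            byRoot.computeIfAbsent(find(s), r -> new TreeSet<>()).add(s);
        }
        TreeMap<String, TreeSet<String>> ordered = new TreeMap<>();
        for (TreeSet<String> g : byRoot.values()) {
            ordered.put(g.first(), g);
        }
        return new ArrayList<>(ordered.values());
    }

    /** Groups larger than the number of services we are willing to test in one deployment. */
    List<TreeSet<String>> oversized(int maxSize) {
        List<TreeSet<String>> result = new ArrayList<>();
        for (TreeSet<String> g : groups()) {
            if (g.size() > maxSize) {
                result.add(g);
            }
        }
        return result;
    }
}
```

Linking A-B and B-C and adding D on its own yields the groups `[A, B, C]` and `[D]`, matching the Alpha/Beta example in the options list.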

Option three carried the largest risk, since we would turn on the entire deployment at once and shut down the entire legacy system shortly after. It was only considered because much of the legacy system was thought to be brittle, and turning off individual pieces of it might break the entire deployment.

Because its impact on legacy was the smallest, we chose option two. Once a deployment had succeeded, we triggered a suite of Postman acceptance tests, then asked internal MISO teams to make sure their integrations were still functional.

For scheduling, since most of the migrated modules were triggered by scheduled CRON jobs, in higher environments we deployed them after the relevant job had completed.
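The "deploy after the job completes" rule can be expressed as a small guard. A minimal sketch with hypothetical inputs: the last run's start and completion times and the next scheduled start, which a real implementation would read from the scheduler. It allows a deployment only when the last run has finished and enough time remains before the next run to deploy and verify; the `needed` buffer is an assumed parameter, and `DeployWindow` is an illustrative name.

```java
import java.time.Duration;
import java.time.Instant;

/** Hypothetical guard for deploying between CRON-triggered job runs. */
final class DeployWindow {
    private DeployWindow() {}

    /**
     * @param lastStart     when the most recent run started
     * @param lastCompleted when it completed, or null if it is still running
     * @param nextStart     when the next run is scheduled to start
     * @param now           current time
     * @param needed        time required to deploy and verify the service group
     */
    static boolean canDeploy(Instant lastStart, Instant lastCompleted, Instant nextStart,
                             Instant now, Duration needed) {
        // A completion time before the latest start belongs to an earlier run.
        boolean lastRunFinished = lastCompleted != null && !lastCompleted.isBefore(lastStart);
        boolean enoughTimeLeft = !now.plus(needed).isAfter(nextStart);
        return lastRunFinished && enoughTimeLeft;
    }
}
```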

Splunk Alerting

Before any observability tooling was in place, all logs were written to files on a VM or to files on attached NAS mounts. That would not work on Kubernetes, where pods are expected to be torn down and spun up dynamically.

I reached out to internal teams at MISO to see what alternatives were in the works, and Splunk plus Grafana were the closest fit for the observability needs of a microservice architecture. These tools were also approved and satisfied the MISO organization's auditing needs. Splunk could stream and search logs, as well as trigger alerts on conditions a developer defines, while Grafana could alert on memory usage and other pod metadata.
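Searching and alerting in Splunk is easiest when each pod writes one structured event per line to standard output instead of to a file. The sketch below formats such a line by hand with only the JDK so it stays self-contained; it assumes a collector forwards container output to Splunk, and its field names (`timestamp`, `level`, `service`, `message`, plus any extras such as `correlationId`) are assumptions rather than a MISO convention. A real Spring Boot service would more likely configure a JSON encoder in its logging framework.

```java
import java.time.Instant;
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical minimal JSON log formatter: one event per line keeps field extraction simple. */
final class JsonLogLine {
    private JsonLogLine() {}

    static String format(Instant timestamp, String level, String service, String message,
                         Map<String, String> extraFields) {
        // LinkedHashMap keeps the fixed fields first, in a predictable order.
        Map<String, String> fields = new LinkedHashMap<>();
        fields.put("timestamp", timestamp.toString());
        fields.put("level", level);
        fields.put("service", service);
        fields.put("message", message);
        fields.putAll(extraFields);
        StringBuilder sb = new StringBuilder("{");
        for (Map.Entry<String, String> e : fields.entrySet()) {
            if (sb.length() > 1) {
                sb.append(',');
            }
            sb.append('"').append(escape(e.getKey())).append("\":\"")
              .append(escape(e.getValue())).append('"');
        }
        return sb.append('}').toString();
    }

    /** Escapes characters that would otherwise break the JSON string. */
    private static String escape(String s) {
        StringBuilder out = new StringBuilder();
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"': out.append("\\\""); break;
                case '\\': out.append("\\\\"); break;
                case '\n': out.append("\\n"); break;
                case '\r': out.append("\\r"); break;
                case '\t': out.append("\\t"); break;
                default:
                    if (c < 0x20) {
                        out.append(String.format("\\u%04x", (int) c));
                    } else {
                        out.append(c);
                    }
            }
        }
        return out.toString();
    }

    /** Writes to stdout (not a file) so the container runtime captures the output. */
    static void log(String level, String service, String message, Map<String, String> extra) {
        System.out.println(format(Instant.now(), level, service, message, extra));
    }
}
```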

I set up onboarding and demo meetings with existing engineers to gauge interest and drive buy-in. I worked with MISO teams to set up alerts, and wrote documentation on creating alerts and searching through logs.

For the non-developers, I introduced Splunk dashboards. This was important because we could leverage the dashboards API to give support teams the autonomy to monitor and create their own tools against our system (or any system at MISO). I also documented and provided examples to serve as aids.

Next steps

Microservices should be as decoupled as possible, so ideally I would prioritize:

- individual databases

- proper distributed transactions

Request volume to individual microservice groups was low enough that distributed transactions weren’t necessary, and the database was shared across microservices anyway. However, to be properly decoupled, each microservice should have its own database instance. That would require a distributed transaction mechanism (such as sagas), since tables a service could previously access directly may no longer be accessible after the change.
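A saga replaces one database transaction with a sequence of local steps, each paired with a compensating action that undoes it if a later step fails. A minimal orchestration-style sketch with hypothetical step names: in practice each action would call another microservice (for example, over Kafka), and compensations would need to be idempotent and durably recorded, which this in-memory version does not attempt.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

/** Hypothetical in-memory saga orchestrator: runs steps in order, compensates completed ones on failure. */
final class Saga {
    /** One local transaction plus the action that semantically undoes it. */
    static final class Step {
        final String name;
        final Runnable action;
        final Runnable compensation;

        Step(String name, Runnable action, Runnable compensation) {
            this.name = name;
            this.action = action;
            this.compensation = compensation;
        }
    }

    private final List<Step> steps = new ArrayList<>();

    Saga step(String name, Runnable action, Runnable compensation) {
        steps.add(new Step(name, action, compensation));
        return this;
    }

    /** Returns true if every step succeeded; otherwise undoes completed steps in reverse order. */
    boolean run() {
        Deque<Step> completed = new ArrayDeque<>();
        for (Step step : steps) {
            try {
                step.action.run();
                completed.push(step);
            } catch (RuntimeException e) {
                // Compensate the most recent step first, mirroring a rollback.
                while (!completed.isEmpty()) {
                    completed.pop().compensation.run();
                }
                return false;
            }
        }
        return true;
    }
}
```

The failing step itself is not compensated, since its local transaction is assumed not to have committed.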

With continuous deployment in mind, we should aim for less manual testing and more automated testing. For the REST/SOAP interfaces, Postman should be the tool to use. For the Kafka tests, it might be worth exposing test endpoints in lower environments: tests could POST to these endpoints and then verify the resulting outputs.
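Because Kafka processing is asynchronous, a test that POSTs to a test endpoint cannot check the output immediately; it has to poll until the expected result appears or a timeout expires. A minimal sketch of that polling step, where the `Supplier` stands in for whatever hypothetical check reads the output (a database query or a GET against another test endpoint); `Eventually.await` is an illustrative name.

```java
import java.time.Duration;
import java.util.Optional;
import java.util.function.Supplier;

/** Hypothetical helper for verifying eventually-consistent results in automated tests. */
final class Eventually {
    private Eventually() {}

    /** Polls check until it returns a value or timeout elapses; returns empty on timeout. */
    static <T> Optional<T> await(Supplier<Optional<T>> check, Duration timeout, Duration interval) {
        long deadline = System.nanoTime() + timeout.toNanos();
        while (true) {
            Optional<T> result = check.get();
            if (result.isPresent()) {
                return result;
            }
            // Subtraction avoids overflow issues when comparing nanoTime values.
            if (System.nanoTime() - deadline >= 0) {
                return Optional.empty();
            }
            try {
                Thread.sleep(interval.toMillis());
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // preserve the interrupt for the caller
                return Optional.empty();
            }
        }
    }
}
```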

For better visibility, I want to create a dashboard showing the health and readiness status of every service in each environment.
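Such a dashboard mostly needs one rolled-up status per environment. Spring Boot Actuator can expose readiness at `/actuator/health/readiness` when its health probes are enabled, returning a JSON body whose `status` field is a value such as `UP` or `OUT_OF_SERVICE`. The sketch below shows only the aggregation step, assuming the statuses have already been fetched into a hypothetical environment-to-service-to-status map; the HTTP polling is omitted to keep it self-contained, and the `DEGRADED` label is an invented display value.

```java
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical roll-up of per-service readiness into one status per environment. */
final class HealthRollup {
    private HealthRollup() {}

    /**
     * @param statuses environment -> (service -> Actuator status string)
     * @return environment -> "UP" if every service is UP, otherwise "DEGRADED (n/m up)"
     */
    static Map<String, String> summarize(Map<String, Map<String, String>> statuses) {
        Map<String, String> summary = new TreeMap<>();
        statuses.forEach((env, services) -> {
            long up = services.values().stream().filter("UP"::equals).count();
            int total = services.size();
            summary.put(env, up == total ? "UP" : "DEGRADED (" + up + "/" + total + " up)");
        });
        return summary;
    }
}
```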